Convolutional neural networks (CNNs) are a class of deep learning models designed for processing visual data. Inspired by the human visual cortex, they excel at analyzing images and videos. These networks use mathematical operations called convolutions to extract features, making them highly efficient for tasks like object detection and classification.

CNNs have become a cornerstone of computer vision. They power applications such as self-driving cars, medical imaging, and facial recognition. Unlike traditional neural networks, CNNs are optimized for spatial data, thanks to their unique architecture.

The core components of CNNs include convolutional layers, pooling layers, and fully connected layers. These layers work together to process and interpret visual information. Parameter sharing, in which each filter reuses the same weights at every position in the image, further improves efficiency by sharply reducing the number of parameters the network must learn and store.

Since the breakthrough of AlexNet in 2012, CNNs have dominated the field of computer vision. Their ability to handle complex visual tasks continues to drive innovation across industries.

Introduction to CNN Models in Deep Learning

CNNs are transforming industries by automating image analysis tasks. These networks slide convolution operations across grid-structured data, such as the pixel grid of an image, to extract meaningful features. Because their architecture is built around this spatial structure, they are highly efficient for tasks like object recognition and classification.

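One of the key strengths of convolutional neural networks is their robustness to where an object appears. Because the same filters scan every location, a feature is detected wherever it occurs in the image (translation equivariance), and pooling makes the output less sensitive to small shifts (approximate translation invariance). As a result, they can recognize an object at different positions in an image. Additionally, parameter sharing reduces the number of parameters, making CNNs faster and more scalable than fully connected networks.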

What is a CNN Model?

A convolutional neural network is a specialized neural network designed for processing grid-like data, such as images. It consists of multiple layers, including convolutional, pooling, and fully connected layers. These layers work together to identify hierarchical features, from simple edges to complex patterns.

Why are CNN Models Important in Deep Learning?

CNNs play a critical role in enabling real-time image processing systems. They are widely used in AI-powered medical diagnostics, with reported results such as 80% accuracy in detecting diabetic retinopathy. In autonomous vehicles, CNNs strengthen perception systems, supporting safer navigation.

“CNNs have revolutionized how machines interpret visual data, paving the way for groundbreaking advancements in AI.”

Their ability to automate processing and extract features efficiently makes them indispensable in modern technology. From healthcare to transportation, CNNs continue to drive innovation across industries.

Understanding the Basics of CNN Models

Deep learning has reshaped how machines interpret complex data, with convolutional neural networks leading the charge. These advanced systems excel at processing visual information, making them indispensable in fields like healthcare, autonomous driving, and security.

What is Deep Learning?

Deep learning is a subset of machine learning that uses hierarchical layers to extract meaningful patterns from data. Unlike traditional methods, it learns features automatically, reducing the need for manual intervention. This approach has proven highly effective for tasks like image recognition and natural language processing.

How CNN Models Differ from Traditional Neural Networks

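Traditional neural networks rely on fully connected layers, in which every neuron connects to every input value; for high-resolution images this quickly becomes inefficient. In contrast, convolutional neural networks use localized receptive fields and weight sharing, significantly reducing the number of parameters. For example, a single fully connected neuron looking at a 100×100 grayscale image needs 10,000 weights, one per pixel, whereas a 5×5 convolutional filter needs only 25 weights, which it reuses at every position in the image.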
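The parameter arithmetic behind this comparison can be checked with a few lines of Python. This is a minimal sketch: the layer sizes (one grayscale channel, 32 output units or 32 filters of size 5×5) are hypothetical choices for illustration, and the gap between the two counts changes with them.

```python
def dense_params(height, width, channels, units):
    """Weights plus biases for a fully connected layer over a flattened image."""
    inputs = height * width * channels   # every pixel feeds every unit
    return inputs * units + units

def conv_params(kernel_size, in_channels, filters):
    """Weights plus biases for a conv layer; the image size does not appear at all."""
    return kernel_size * kernel_size * in_channels * filters + filters

# Hypothetical layer sizes: 100x100 grayscale input, 32 units or 32 filters of 5x5.
print(dense_params(100, 100, 1, 32))  # 320032
print(conv_params(5, 1, 32))          # 832
```

Doubling the image side to 200 pixels roughly quadruples the fully connected count, while the convolutional count stays at 832.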

CNNs also introduce convolutional layers and pooling layers to preserve spatial hierarchy. These layers identify patterns ranging from simple edges to complex objects, ensuring robust feature extraction. Additionally, by downsampling the data, pooling layers reduce the number of values later layers must process, which can help reduce overfitting.

Benchmarks such as MNIST handwritten digit recognition showcase the accuracy improvements achieved by CNNs. Because convolution consists of many independent multiply-add operations, it maps well onto the parallel hardware of GPUs, making CNNs faster and more scalable than traditional networks.

The Architecture of a CNN Model

The layered structure of CNNs enables them to extract features from visual inputs. This architecture is designed to process spatial data efficiently, making it ideal for tasks like image recognition and classification. The three main components—convolutional layers, pooling layers, and fully connected layers—work together to transform raw images into actionable insights.

Convolutional Layers

Convolutional layers are the core of CNN architecture. They apply small filters (typically 3×3 or 5×5) to their input, which is the image itself in the first layer and the feature maps of earlier layers after that. Each filter slides across the data with a chosen stride (the step size between positions) and padding (extra border values that control the output size). This process produces feature maps that highlight patterns like edges and textures. An activation function, most often ReLU, then introduces nonlinearity, enabling the network to learn complex features.
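The sliding-window operation can be sketched in plain Python for a single channel and a single square filter. This is an illustrative sketch, not how a framework implements it: real convolutional layers handle many input channels and filters at once, add a learned bias, and run vectorized on GPUs. As in most deep learning frameworks, the kernel is applied without flipping (strictly speaking, cross-correlation).

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D convolution (cross-correlation) with zero padding."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    # Surround the image with `padding` rows and columns of zeros.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    # Output size: (input + 2 * padding - kernel) // stride + 1
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Weighted sum of the k x k window currently under the kernel.
            row.append(sum(padded[top + a][left + b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        output.append(row)
    return output


def relu(feature_map):
    """Element-wise ReLU: negative responses become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


image = [[1.0] * 5 for _ in range(5)]    # 5x5 input of ones
kernel = [[1.0] * 3 for _ in range(3)]   # 3x3 filter of ones
print(len(conv2d(image, kernel)))                       # 3 (no padding)
print(len(conv2d(image, kernel, padding=1)))            # 5 (same size as input)
print(len(conv2d(image, kernel, stride=2, padding=1)))  # 3
```

The three calls show how stride and padding alone change the feature map size for the same 3×3 filter.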

Pooling Layers

Pooling layers reduce the spatial dimensions of the feature maps, making the network more computationally efficient. Max pooling, for example, selects the highest value from each 2×2 window; with a stride of 2 it keeps one value out of every four, preserving the strongest responses while reducing the data size by 75%. Average pooling, on the other hand, calculates the mean value of each window, offering a smoother representation.

| Pooling Type | Operation | Advantages |
|---|---|---|
| Max Pooling | Selects maximum value | Preserves dominant features |
| Average Pooling | Calculates mean value | Reduces noise |
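Both pooling types can be sketched with one small function, assuming 2×2 windows, a stride of 2, and no padding (common settings, but assumptions here). On the 4×4 example below, sixteen values shrink to four.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Max or average pooling over size x size windows (no padding)."""
    output = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + a][j + b] for a in range(size) for b in range(size)]
            if mode == "max":
                row.append(max(window))                # keep the strongest response
            else:
                row.append(sum(window) / len(window))  # average the window
        output.append(row)
    return output

fmap = [[1, 3, 2, 0],
        [4, 6, 1, 1],
        [0, 2, 5, 7],
        [1, 1, 8, 4]]
print(pool2d(fmap))                  # [[6, 2], [2, 8]]
print(pool2d(fmap, mode="average"))  # [[3.5, 1.0], [1.0, 6.0]]
```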

Fully Connected Layers

The fully connected layers come at the end of the network, combining all extracted features for final classification. These layers analyze the processed data and assign probabilities to different classes, enabling tasks like object recognition. In models like VGG-16, stacking multiple convolutional layers before the fully connected ones enhances accuracy and robustness.

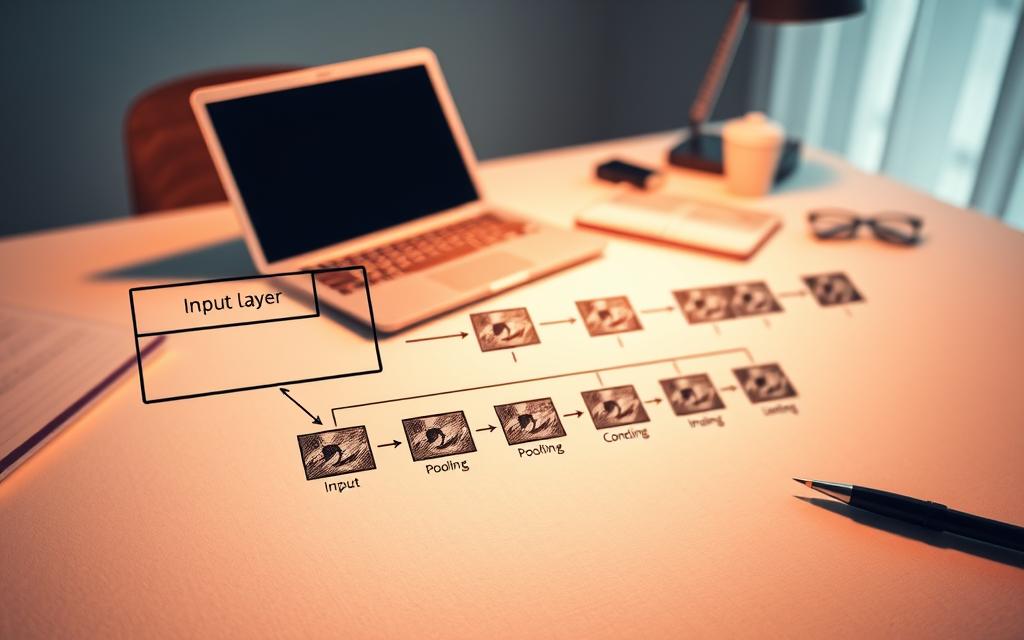

How CNN Models Work: A Step-by-Step Guide

Understanding the inner workings of convolutional neural networks begins with processing visual data step by step. These networks transform raw images into actionable insights through a series of structured operations. Let’s break down the process into four key stages.

Input Image Processing

The first step involves preparing the input image for analysis. This includes resizing the image to a standard format, such as 224×224 pixels, and normalizing pixel values to a range of 0 to 1. These preprocessing steps ensure consistency and improve the efficiency of the network.
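A minimal sketch of these two preprocessing steps, assuming 8-bit pixel values (0 to 255) and nearest-neighbor resizing; image libraries usually offer smoother interpolation such as bilinear, and many pipelines also subtract a per-channel mean. The tiny 2×2 input stands in for a real photo.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize: each output pixel copies the closest input pixel."""
    in_h, in_w = len(image), len(image[0])
    return [[image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]

def normalize(image, max_value=255):
    """Scale 8-bit pixel values from [0, 255] into [0, 1]."""
    return [[p / max_value for p in row] for row in image]

tiny = [[0, 255],
        [255, 0]]
resized = resize_nearest(tiny, 4, 4)  # 2x2 -> 4x4; a real pipeline might target 224x224
print(normalize(resized)[0])          # [0.0, 0.0, 1.0, 1.0]
```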

Feature Extraction through Convolution

Next, the network applies convolution to extract meaningful patterns from the image. Filters slide across the image to detect edges and textures; in a CNN their weights are learned during training, and early-layer filters often end up resembling hand-designed edge detectors such as the Sobel filter. The resulting feature maps highlight essential details, such as vertical or horizontal lines.
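To make the Sobel example concrete, the sketch below applies the standard horizontal-gradient Sobel kernel to a small image whose left half is dark and right half is bright. It assumes no padding and a stride of 1; in a trained CNN the kernel values would be learned rather than fixed like this.

```python
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]  # responds to left-to-right brightness changes (vertical edges)

def correlate_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and take weighted sums."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]

# Dark left half (0) and bright right half (10): a vertical edge in the middle.
image = [[0, 0, 0, 10, 10, 10] for _ in range(4)]
print(correlate_valid(image, SOBEL_X))  # [[0, 40, 40, 0], [0, 40, 40, 0]]
```

The feature map is zero over flat regions and large only where the window straddles the edge, which is exactly the "highlight" effect described above.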

Downsampling with Pooling

After feature extraction, pooling reduces the spatial dimensions of the data. Max pooling, for instance, selects the highest value from a 2×2 window, preserving dominant features while reducing computational load. Because small shifts in the input often leave the pooled maximum unchanged, this step also makes the network less sensitive to exact positions and can help reduce overfitting.

Classification with Fully Connected Layers

Finally, the network uses fully connected layers to perform classification. These layers combine the extracted features into one score (logit) per category, such as cat or dog. The softmax function then converts these scores into probabilities that are all positive and sum to 1, and the category with the highest probability is taken as the prediction.
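The final stage can be sketched as one fully connected layer followed by softmax. The weights, biases, and feature values below are made-up numbers for illustration; a trained network learns its weights, and the class names are hypothetical.

```python
import math

def dense(features, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Turn raw scores into positive probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 3-value feature vector and two classes: index 0 = "cat", 1 = "dog".
features = [0.5, 1.0, -0.2]
weights = [[1.0, 0.5, 0.0],
           [-0.5, 1.5, 1.0]]
biases = [0.1, 0.0]
logits = dense(features, weights, biases)
probs = softmax(logits)
print(probs)  # two probabilities summing to 1, slightly favoring "cat"
```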

Key Components of CNN Models

Filters, activation functions, and feature maps form the backbone of convolutional neural networks. These elements work together to process visual data efficiently, enabling tasks like image recognition and classification. Understanding their roles is essential for grasping how these networks achieve high accuracy.

Filters and Kernels

Filters are small matrices used to extract features from input data. Common sizes include 3×3 and 5×5, with 3×3 being standard in networks like VGG. These filters slide across the image, detecting patterns such as edges, textures, and shapes.

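Different kernel types serve specific purposes. For example, edge detection kernels highlight boundaries, while blur kernels smooth out noise. Sharpen kernels, on the other hand, enhance details. In classical image processing these kernels are designed by hand; in a CNN the filter values are learned from data during training, so the network discovers whichever filters are most useful for the task.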

Activation Functions

Activation functions introduce nonlinearity into the network, enabling it to learn complex patterns. The ReLU (Rectified Linear Unit) function is widely used due to its simplicity and effectiveness in reducing vanishing gradient issues.

Other variants like Leaky ReLU and ELU offer additional benefits. Leaky ReLU prevents dead neurons by allowing small negative values, while ELU improves convergence speed. Each function has its strengths, making them suitable for different scenarios.

| Activation Function | Advantages |
|---|---|
| ReLU | Simple, reduces vanishing gradients |
| Leaky ReLU | Prevents dead neurons |
| ELU | Improves convergence speed |
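The three activation functions in the table are short enough to write out directly. The slopes α=0.01 for Leaky ReLU and α=1.0 for ELU are common defaults, assumed here rather than taken from a specific framework.

```python
import math

def relu(x):
    """Pass positive values through, replace negatives with 0."""
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope, so their gradient is never exactly 0."""
    return x if x > 0 else alpha * x

def elu(x, alpha=1.0):
    """Smooth curve for negative inputs that saturates toward -alpha."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

for x in (-2.0, 0.5):
    print(x, relu(x), leaky_relu(x), elu(x))
```

All three agree for positive inputs; they differ only in how much signal (and gradient) they let through for negative ones.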

Feature Maps

Feature maps are the output of convolutional layers, representing extracted patterns. Each 2×2 pooling layer with a stride of 2 halves the width and height of a feature map, so after n such layers each spatial dimension has shrunk by a factor of 2ⁿ. This downsampling reduces computational load while preserving essential features.
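The shrinking can be traced with a short helper, assuming 2×2 pooling with a stride of 2 and no padding. Starting from a 224-pixel side, five pooling stages leave 7 pixels, which matches the 7×7 feature maps at the end of VGG-16's convolutional stack.

```python
def spatial_size_after_pooling(size, num_pools, window=2, stride=2):
    """Side length of a feature map after `num_pools` pooling layers (no padding)."""
    for _ in range(num_pools):
        size = (size - window) // stride + 1
    return size

print([spatial_size_after_pooling(224, n) for n in range(6)])
# [224, 112, 56, 28, 14, 7]
```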

Visualization techniques, such as heatmaps, help interpret feature maps by highlighting areas of interest. Depthwise separable convolutions further enhance efficiency by reducing the number of parameters, making the network faster and more scalable.
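The savings from depthwise separable convolutions can be estimated by counting weights. This sketch ignores bias terms and assumes a 3×3 kernel with 128 input and 128 output channels, hypothetical sizes chosen for illustration.

```python
def standard_conv_params(k, c_in, c_out):
    """Every output channel has its own k x k filter over every input channel."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    depthwise = k * k * c_in  # one k x k filter per input channel (spatial filtering)
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

print(standard_conv_params(3, 128, 128))        # 147456
print(depthwise_separable_params(3, 128, 128))  # 17536
```

For these sizes the separable version needs roughly 8.4 times fewer weights; the split into a spatial step and a channel-mixing step is what makes the difference.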

“The interplay of filters, activation functions, and feature maps is what makes convolutional neural networks so powerful in visual data processing.”

Training CNN Models

Training convolutional neural networks involves a systematic approach to ensure optimal performance. This process includes preparing data, defining loss functions, and optimizing weights through backpropagation. Each step is critical for achieving high accuracy and efficiency in visual data processing.

Data Preparation

Effective training begins with proper data preparation. Large datasets like ImageNet, whose standard classification training set contains about 1.2 million labeled images, are often used. Data augmentation techniques such as rotation, flipping, and scaling create modified copies of training images, increasing the dataset's diversity and improving the model's ability to generalize.
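A minimal augmentation sketch, assuming images stored as nested lists and restricting rotations to multiples of 90 degrees so no interpolation is needed; production pipelines typically also use arbitrary-angle rotation, random scaling, and cropping. A seeded random generator keeps the example reproducible.

```python
import random

def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, rng):
    """Randomly flip and rotate; the label of the image stays the same."""
    if rng.random() < 0.5:
        image = hflip(image)
    for _ in range(rng.randrange(4)):  # 0, 90, 180 or 270 degrees
        image = rotate90(image)
    return image

rng = random.Random(0)  # seeded so the example is reproducible
sample = [[1, 2],
          [3, 4]]
print(rotate90(sample))  # [[3, 1], [4, 2]]
print([augment(sample, rng) for _ in range(3)])
```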

Batch size selection also plays a crucial role. Smaller batches, like 32, produce noisier gradient estimates, which can help generalization, while larger batches, such as 256, give smoother estimates and make better use of parallel hardware. Choosing the right balance helps achieve smoother learning and faster convergence.

Loss Functions and Optimization

Loss functions measure the difference between predicted and actual outputs. Cross-entropy loss is commonly used for classification tasks. It quantifies the error, guiding the model toward better predictions.
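For a single example, cross-entropy loss reduces to the negative log of the probability the model assigned to the correct class. The sketch below assumes the probabilities already come from a softmax and adds a small epsilon (an assumption) to avoid taking the log of zero.

```python
import math

def cross_entropy(probs, target_index, eps=1e-12):
    """Negative log-probability assigned to the correct class."""
    return -math.log(max(probs[target_index], eps))

# The correct class is index 0 in both cases.
print(round(cross_entropy([0.7, 0.2, 0.1], 0), 3))  # 0.357 (confident and right: low loss)
print(round(cross_entropy([0.1, 0.2, 0.7], 0), 3))  # 2.303 (confident and wrong: high loss)
```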

Optimizers like Adam, with parameters β1=0.9 and β2=0.999, adjust the weights to minimize the loss. Learning rate scheduling, such as step decay or cosine annealing, starts with a larger learning rate for fast progress and lowers it over time so the weights settle instead of overshooting the minimum.

| Optimizer | Parameters | Advantages |
|---|---|---|
| Adam | β1=0.9, β2=0.999 | Efficient, adaptive learning rates |
| SGD | Momentum=0.9 | Simple, effective for large datasets |
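The Adam update and a cosine annealing schedule can be sketched for a single scalar parameter. β1=0.9 and β2=0.999 come from the table; the learning rates, ε=1e-8, and the toy objective f(x) = (x - 3)² are assumptions chosen so the example runs quickly.

```python
import math

def adam_step(param, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter. `state` is (m, v, t)."""
    m, v, t = state
    t += 1
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero start
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, (m, v, t)

def cosine_lr(step, total_steps, base_lr, min_lr=0.0):
    """Cosine annealing: decay from base_lr to min_lr along half a cosine wave."""
    progress = step / total_steps
    return min_lr + (base_lr - min_lr) * 0.5 * (1 + math.cos(math.pi * progress))

# Toy problem (assumption): minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x, state = 0.0, (0.0, 0.0, 0)
total = 2000
for step in range(total):
    lr = cosine_lr(step, total, base_lr=0.1)
    x, state = adam_step(x, 2 * (x - 3), state, lr=lr)
print(round(x, 3))  # ends close to 3.0
```

Frameworks apply the same update element-wise to every weight tensor; the scalar version just makes the running averages and bias correction easy to follow.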

Backpropagation in CNN Models

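Backpropagation computes the gradient of the loss function with respect to every weight by applying the chain rule backward through the layers; an optimizer such as SGD or Adam then uses these gradients to update the weights. Repeating this cycle lets the model learn from its mistakes and improve its accuracy over time. Techniques like gradient clipping prevent exploding gradients, maintaining stability during training.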
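Backpropagation itself requires automatic differentiation through every layer, so this sketch covers only what happens to the gradients afterwards: clipping by global L2 norm (one common form of gradient clipping, assumed here) followed by a plain SGD update. The weight and gradient values are made up.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Scale gradients down so their combined L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads), total_norm
    # Same direction, shorter length: each component is scaled by max_norm / total_norm.
    return [g * max_norm / total_norm for g in grads], total_norm

def sgd_update(params, grads, lr):
    """Move each parameter a small step against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

params = [0.5, -0.3]  # hypothetical weights
grads = [3.0, 4.0]    # hypothetical "exploding" gradients with norm 5.0
clipped, norm = clip_by_global_norm(grads, max_norm=1.0)
print(norm, clipped)  # 5.0 [0.6, 0.8]
params = sgd_update(params, clipped, lr=0.1)
```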

For video processing, when CNNs are combined with recurrent layers, backpropagation through time extends this method across the frames of a sequence. Transfer learning, using pre-trained weights from ImageNet, accelerates learning by leveraging existing knowledge.

“Proper training techniques are the backbone of high-performing convolutional neural networks, enabling them to tackle complex visual tasks with precision.”

Evaluating CNN Models

Evaluating the performance of convolutional neural networks is crucial for ensuring their effectiveness in real-world applications. Metrics like accuracy, precision, recall, and F1 score provide insights into how well these models perform in tasks such as image classification and object detection.

ResNet-50, for example, achieves a top-1 accuracy of 76% on the ImageNet dataset. This benchmark highlights the model’s ability to correctly classify images, but it’s only one aspect of evaluation. A comprehensive assessment requires analyzing multiple metrics to understand the model’s strengths and weaknesses.

Accuracy and Precision

Accuracy measures the proportion of correct predictions out of the total predictions made. While it’s a useful metric, it can be misleading in imbalanced datasets. For instance, a model might achieve high accuracy by simply predicting the majority class.

Precision, on the other hand, focuses on the proportion of true positive predictions among all positive predictions. It's particularly important in applications like medical diagnostics, where false positives can have serious consequences. Reporting precision alongside accuracy gives a more reliable picture of a model's performance.

Recall and F1 Score

Recall measures the model’s ability to identify all relevant instances in the dataset. It’s crucial in tasks like fraud detection, where missing a positive case can be costly. However, high recall often comes at the expense of precision.

The F1 score is the harmonic mean of precision and recall, F1 = 2 × precision × recall / (precision + recall), providing a single metric that balances both. It's especially useful for imbalanced datasets, where one class significantly outnumbers the other. In multi-class and multi-label classification, per-class F1 scores can be averaged to evaluate the model's performance across all classes.
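These metrics can be computed from predicted and true labels with a few counts. This sketch covers the binary case and treats label 1 as the positive class (an assumption); multi-class versions average the per-class scores. The toy data show the accuracy trap from the accuracy discussion: a model that always predicts the majority class reaches 80% accuracy with zero precision, recall, and F1.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall, F1) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

# Imbalanced toy labels: 8 negatives, 2 positives.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10
one_hit_one_miss = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
print(classification_metrics(y_true, always_negative))   # (0.8, 0.0, 0.0, 0.0)
print(classification_metrics(y_true, one_hit_one_miss))  # (0.8, 0.5, 0.5, 0.5)
```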

Additional evaluation techniques include interpreting confusion matrices, analyzing ROC curves, and calculating mean average precision (mAP) for object detection. Cross-validation strategies and test-time augmentation further enhance the robustness of the evaluation process. Leaderboard scores from benchmarks like ImageNet and COCO provide comparative insights into model performance.

Applications of CNN Models

From healthcare to retail, CNNs are driving innovation across diverse sectors. These advanced algorithms excel at handling complex visual tasks, making them indispensable in modern technology. Their ability to automate processing and extract meaningful patterns has led to groundbreaking advancements in various fields.

Image Recognition and Classification

CNNs power tools like Google Lens, achieving 95% accuracy in identifying objects and text in images. These systems are widely used in retail for product recognition and in social media for tagging photos. The ability to classify images with high precision has transformed industries like e-commerce and entertainment.

Object Detection

YOLOv4, a CNN-based detector that was state of the art at its 2020 release, runs at about 65 FPS on a Tesla V100 GPU, enabling real-time detection of objects in videos. This kind of technology is crucial for autonomous vehicles, where identifying pedestrians, vehicles, and obstacles is essential for safe navigation. Additionally, Mask R-CNN is used for instance segmentation, providing detailed object outlines in complex scenes.

Medical Image Analysis

CNNs are revolutionizing healthcare by automating the analysis of medical scans. FDA-approved systems use these networks to detect abnormalities in mammograms with high accuracy. During the COVID-19 pandemic, CNNs were employed to identify infections from CT scans, aiding in faster diagnosis and treatment.

Other notable applications include:

- DALL-E for generating creative images from text descriptions.

- Amazon Go’s cashierless system, which relies on CNNs for product tracking.

- Agriculture, where CNNs identify plant diseases from leaf images.

- Wildlife conservation, using camera traps to classify species.

- Astronomy, analyzing galaxy morphology for research purposes.

| Application | Technology | Impact |
|---|---|---|
| Retail | Amazon Go | Cashierless shopping experience |
| Healthcare | FDA-approved systems | Accurate mammogram analysis |
| Autonomous Vehicles | YOLOv4 | Real-time object detection |

“CNNs are transforming industries by automating complex visual tasks, paving the way for smarter and more efficient systems.”

CNN Models in Computer Vision

Computer vision has become a cornerstone of modern technology, with convolutional neural networks driving its evolution. These systems process visual data with high accuracy, enabling groundbreaking applications across industries. From autonomous vehicles to facial recognition, CNNs are reshaping how machines interpret the world.

Self-Driving Cars

Autonomous vehicles rely heavily on computer vision to navigate safely. Tesla's Autopilot, for instance, uses 48 CNN subsystems to process real-time data from cameras and sensors. These networks analyze the input to detect pedestrians, vehicles, and road signs, providing the information the vehicle needs for its driving decisions.

Many autonomous-driving systems also use sensor fusion, combining LiDAR and camera data to improve reliability. Pedestrian detection benchmarks, such as those on the KITTI dataset, showcase the accuracy of CNNs in identifying objects even in complex environments. This technology is critical for achieving Level 5 autonomy, where no human intervention is required.

Facial Recognition

Facial recognition systems powered by CNNs have achieved remarkable accuracy. FaceNet, for example, reports 99.63% accuracy on the Labeled Faces in the Wild (LFW) dataset, using a triplet loss that pulls embeddings of the same person together and pushes those of different people apart. Neural-network-based face recognition also powers consumer products such as Apple's Face ID, providing secure and seamless authentication.

Beyond security, CNNs enable emotion detection systems, analyzing facial expressions to gauge user sentiment. Pose estimation, another application, is transforming sports analytics by tracking athletes’ movements with precision. These advancements highlight the versatility of neural networks in computer vision tasks.

“CNNs are the backbone of modern computer vision, enabling machines to see and interpret the world with human-like accuracy.”

Other notable applications include OCR for document digitization and industrial quality inspection, where CNNs detect defects with high precision. These systems streamline workflows, reduce errors, and enhance productivity across sectors.

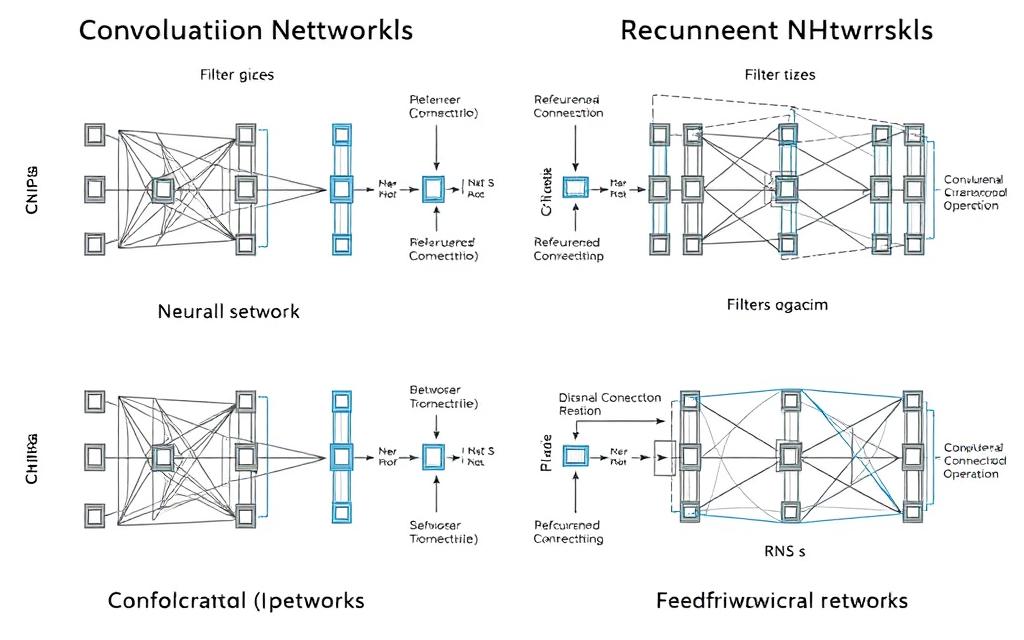

CNN Models vs. Other Neural Networks

Different types of neural networks serve unique purposes in artificial intelligence. While convolutional neural networks excel in spatial data processing, other architectures like RNNs and traditional networks handle different tasks. Understanding these differences is crucial for selecting the right architecture for specific applications.

CNN vs. RNN

Convolutional neural networks are designed for spatial data, such as images, while recurrent neural networks (RNNs) process sequential data, like text or time series. RNN variants such as LSTMs use gates to retain information over long sequences, making them well suited to tasks like speech recognition. In contrast, CNNs focus on extracting patterns from grid-like data, such as edges and textures in images.

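Hybrid architectures, like CNN-RNN, combine the strengths of both. In video analysis, for example, a CNN can extract features from each frame while an RNN models how those features change over time; 3D CNNs, which convolve across time as well as space, offer an alternative way to capture spatial-temporal patterns. Such combinations enhance performance in complex tasks like action recognition.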

CNN vs. Traditional Neural Networks

Traditional neural networks rely on fully connected layers, which require significantly more parameters. For instance, each fully connected neuron over a 50×50 grayscale image needs 2,500 weights, one per pixel, while a 3×3 convolutional filter needs only 9 weights regardless of image size. This difference makes CNNs more scalable for large images and datasets.

CNNs also introduce convolutional layers and pooling, which preserve spatial hierarchy and reduce computational load. This architecture is optimized for GPU processing, making CNNs faster and more efficient for tasks like image classification.

“The choice of neural network type depends on the data and task at hand, with CNNs leading in visual data processing.”

Other considerations include memory requirements and attention mechanisms. Transformers, for example, use attention to relate every element of a sequence to every other element, capturing global context at a cost that grows quadratically with sequence length, while CNNs focus on local patterns. Understanding these nuances helps in designing effective deep learning solutions.

Benefits of Using CNN Models

The benefits of CNNs extend across various industries due to their unique capabilities. These advanced systems excel in automating complex tasks, making them indispensable in modern technology. Their ability to process visual data efficiently and extract meaningful patterns has revolutionized fields like healthcare, retail, and autonomous driving.

Automatic Feature Extraction

One of the most significant benefits of CNNs is their ability to perform automatic feature extraction. Traditional methods often require manual engineering, which can be time-consuming and error-prone. CNNs reduce this effort by up to 90%, streamlining the process of identifying patterns like edges, textures, and shapes.

For example, MobileNet achieves 70.6% top-1 accuracy on the ImageNet dataset with just 4.2 million parameters. This efficiency comes from its depthwise separable convolutions combined with the network's ability to learn hierarchical features directly from the data, eliminating the need for hand-engineered features.

Efficiency in Image Processing

CNNs are designed for efficiency in image processing. Their architecture, well suited to GPU acceleration, enables real-time analysis of visual data. Techniques like pruning (removing low-importance weights) and quantization (storing weights at lower numerical precision) further reduce computational load, often with little loss of accuracy.
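Both techniques can be sketched on a flat list of weights. The variants shown, unstructured magnitude pruning and symmetric per-tensor 8-bit quantization, are common choices assumed here for illustration; real toolkits usually fine-tune after pruning and calibrate quantization ranges on sample data.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:k])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

weights = [0.02, -0.5, 0.9, -0.01, 0.3, -1.27]  # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.0, -0.5, 0.9, 0.0, 0.0, -1.27]
q, scale = quantize_int8(pruned)
print(q)       # [0, -50, 90, 0, 0, -127]
```

Each stored integer fits in one byte instead of the four a 32-bit float needs, and the rounding error per weight is at most half of `scale`.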

Energy efficiency is another key advantage. Because weight sharing keeps models compact, efficient CNN designs can run within the power and memory budgets of mobile and embedded devices. This combination of speed and low resource usage supports scalability across diverse use cases.

Other notable advantages include:

- End-to-end learning, which simplifies the development process.

- Domain adaptation, allowing models to generalize across different datasets.

- Multimodal fusion, enabling the integration of multiple data types for richer insights.

“CNNs redefine efficiency in AI, automating tasks that once required extensive manual effort.”

Challenges and Limitations of CNN Models

While CNNs offer groundbreaking capabilities, they come with notable challenges. These advanced systems require significant resources and face limitations that can hinder their effectiveness in real-world applications. Understanding these issues is crucial for optimizing their use and addressing potential drawbacks.

Training Complexity

Training CNNs is resource-intensive and time-consuming. For example, training a ResNet-50 on the ImageNet dataset has been reported to take approximately 29 hours on a single server with eight GPUs. This process demands high computational power and expertise, putting it out of reach for many smaller organizations.

Hyperparameter sensitivity adds another layer of complexity. Selecting the right hyperparameters, such as learning rate and batch size, significantly impacts the model's performance. Without proper tuning, the training process can lead to suboptimal results or even failure.

Data Requirements

CNNs rely heavily on large, annotated datasets for effective learning. Medical applications, for instance, often require over 10,000 annotated scans to achieve reliable performance. The cost of annotation, averaging $6 per image for bounding boxes, further escalates expenses.

Domain shift issues also pose a challenge. A model trained on one dataset may struggle to generalize to another, reducing its applicability across different environments. This limitation necessitates extensive retraining and validation.

| Challenge | Impact |
|---|---|
| Annotation Costs | High expenses for labeling data |
| Adversarial Attacks | Vulnerability to manipulated inputs |
| Ethical Bias Risks | Potential for unfair or biased outcomes |

“The challenges of CNNs highlight the need for ongoing research and innovation to overcome these limitations.”

Other issues include memory bottlenecks, black-box interpretability, and ethical bias risks. Addressing these challenges is essential for maximizing the potential of CNNs in diverse applications.

Future Trends in CNN Models

The evolution of convolutional neural networks continues to shape the future of artificial intelligence. As technologies advance, these systems are becoming more efficient, accurate, and versatile, from improved architectures to tighter integration with other AI tools.

Advancements in CNN Architecture

Vision Transformers (ViT) are setting new benchmarks, reaching 88.55% top-1 accuracy on the ImageNet dataset after pre-training on a much larger dataset. ViT itself replaces convolutions with attention, but its success has spurred hybrid architectures that combine the strengths of CNNs and transformers. Neuromorphic chips like Loihi 2 are also making waves, offering 10x energy efficiency compared to traditional hardware.

Key innovations include:

- Neural architecture search (NAS) for automated model design.

- Capsule networks that improve hierarchical feature extraction.

- Quantum CNN prototypes, an early research direction exploring whether quantum computing can speed up convolution-like processing.

Integration with Other AI Technologies

The integration of CNNs with other AI technologies is unlocking new capabilities. Federated learning allows multiple devices to collaboratively train models without sharing raw data. This approach enhances privacy and scalability, making it ideal for applications like healthcare and finance.
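One widely used aggregation rule is Federated Averaging (FedAvg): each client trains locally and sends back only its model weights, which the server averages in proportion to each client's number of training examples. The sketch below shows only that averaging step; the client weights and dataset sizes are hypothetical, and real systems add local training rounds, communication, and often secure aggregation.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: size-weighted average of client weight vectors."""
    total = sum(client_sizes)
    return [sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(len(client_weights[0]))]

# Three hypothetical clients; only weights leave each device, never raw data.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(federated_average(clients, sizes))  # [3.5, 4.5]
```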

Other notable trends include:

- Explainable AI techniques that improve transparency in decision-making.

- Edge deployment optimizations for real-time processing on low-power devices.

- Synthetic data generation to address the challenges of limited datasets.

“The future of CNNs lies in their ability to adapt and evolve, driving innovation across industries.”

Conclusion

Convolutional neural networks have become a cornerstone of modern AI, revolutionizing how machines interpret visual data. Their dominance in computer vision is undeniable, powering innovations from self-driving cars to medical diagnostics. Emerging applications in healthcare, such as detecting diseases from image scans, are saving lives and improving outcomes.

With tools like AutoML, these advanced systems are becoming more accessible to developers and researchers. However, ethical implementation remains crucial to ensure fairness and transparency in AI-driven decisions. As deep learning evolves, neuromorphic hardware promises even greater efficiency and scalability.

For those eager to explore, frameworks like Keras and TensorFlow offer hands-on opportunities to experiment with convolutional neural networks. Continued learning and innovation will drive the next wave of breakthroughs in AI and processing technologies.

FAQ

What is a CNN Model?

A CNN model, or Convolutional Neural Network, is a specialized neural network designed for processing structured grid data like images. It uses convolutional layers to automatically detect patterns and features.

Why are CNN Models Important in Deep Learning?

CNN models excel in tasks like image recognition and classification due to their ability to extract hierarchical features efficiently, making them essential in computer vision and related fields.

How do CNN Models Differ from Traditional Neural Networks?

Unlike traditional fully connected networks, CNN models use local connections and shared weights to exploit the spatial structure of data, which makes them far more parameter-efficient on images and lets them learn features directly from pixels.

What are Convolutional Layers?

Convolutional layers apply filters to input images to detect features like edges, textures, and patterns, creating feature maps for further processing.

What is the Role of Pooling Layers?

Pooling layers downsample feature maps, reducing their dimensions while retaining important information, which helps in reducing computational complexity.

How do Fully Connected Layers Work?

Fully connected layers take the processed features from convolutional and pooling layers to perform classification or regression tasks.

What are Filters and Kernels in CNN Models?

Filters and kernels are small matrices used in convolutional layers to extract specific features from input data by sliding over the image.

What are Activation Functions in CNN Models?

Activation functions introduce non-linearity into the network, enabling it to learn complex patterns and relationships in the data.

How are CNN Models Trained?

Training involves data preparation, applying loss functions to measure errors, and using backpropagation to adjust weights for better accuracy.

What are the Applications of CNN Models?

CNN models are widely used in image recognition, object detection, medical image analysis, and other computer vision tasks.

How do CNN Models Benefit Image Processing?

They automatically extract features, reducing the need for manual intervention and improving efficiency in handling large datasets.

What are the Challenges of Using CNN Models?

Challenges include high computational requirements, the need for large datasets, and complexity in training deep architectures.

What are Future Trends in CNN Models?

Future advancements include improved architectures, integration with other AI technologies, and applications in emerging fields like autonomous systems.